Anubhav: recognizing emotions through facial expression

Swapna Agarwal, Bikash Santra, and Dipti Prasad Mukherjee

Electronics and Communication Sciences Unit, Indian Statistical Institute, 203, B. T. Road, Kolkata 700108

{agarwal.swapna, bikashsantra85, diptiprasad.mukherjee}@gmail.com

Abstract

We present a computer vision-based system named Anubhav (a Hindi word meaning feeling) which recognizes emotional facial expressions from streaming face videos. Our system runs at a speed of 10 frames per second (fps) on a 3.2-GHz desktop and at 3 fps on an Android mobile device. Using entropy and correlation-based analysis, we show that certain salient regions of the face image carry more expression-related information than other face regions. We also show that spatially close features within a salient face region carry correlated information regarding expression. Therefore, only a few features from each salient face region are enough for expression representation. Extracting only a few features considerably reduces response time. Exploiting expression information from the spatial as well as the temporal dimension gives good recognition accuracy. We have done extensive experiments on two publicly available data sets and also on live video streams. The recognition accuracies of our system on the benchmark CK+ data set and on live video streams are at least 13% and 20% better, respectively, than those of competing approaches.
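To make the entropy and correlation idea concrete, the following is a minimal illustrative sketch, not the method from the paper. It assumes Shannon entropy of a grayscale intensity histogram as the information measure for a face region, and Pearson correlation between feature columns as the redundancy measure. The function names `region_entropy` and `feature_correlation`, the bin count, and the synthetic data are all hypothetical choices made for this example.

```python
import numpy as np


def region_entropy(region, bins=32):
    """Shannon entropy (in bits) of the intensity histogram of an 8-bit grayscale region."""
    hist, _ = np.histogram(region, bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]  # drop empty bins so log2 is defined
    return float(-(p * np.log2(p)).sum())


def feature_correlation(features):
    """Pearson correlation matrix between feature columns (rows are samples)."""
    return np.corrcoef(features, rowvar=False)


rng = np.random.default_rng(0)

# Synthetic stand-ins for face regions (assumption: 16x16 grayscale patches).
flat_patch = np.full((16, 16), 128, dtype=np.uint8)                 # no variation
textured_patch = rng.integers(0, 256, size=(16, 16), dtype=np.uint8)  # high variation

flat_entropy = region_entropy(flat_patch)
textured_entropy = region_entropy(textured_patch)

# Synthetic features: columns 0 and 1 mimic spatially close (redundant) features,
# column 2 mimics an unrelated feature from elsewhere.
base = rng.normal(size=200)
features = np.column_stack([
    base,
    base + 0.05 * rng.normal(size=200),
    rng.normal(size=200),
])
corr = feature_correlation(features)

print("flat vs textured entropy:", flat_entropy, textured_entropy)
print("correlation of close features:", corr[0, 1])
```

In this toy setting, a region with higher entropy would be a candidate salient region, and a pair of features with correlation close to 1 suggests that keeping only one of them loses little information.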

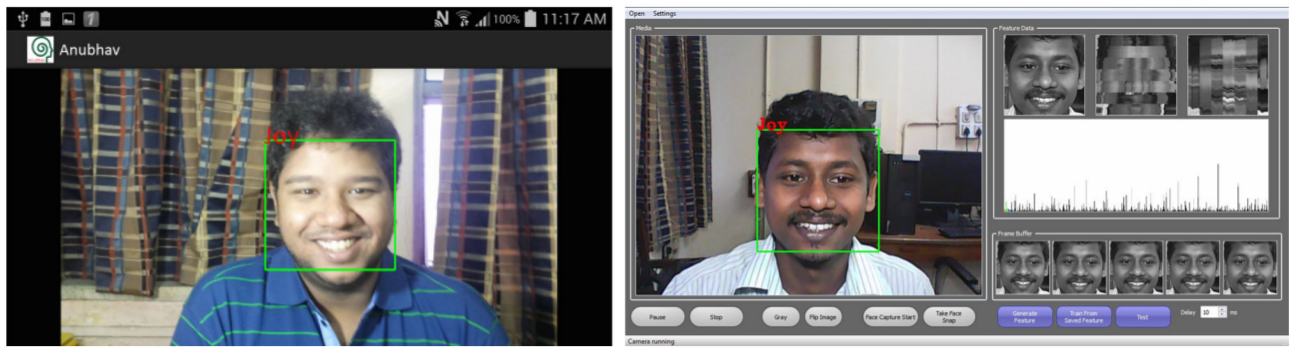

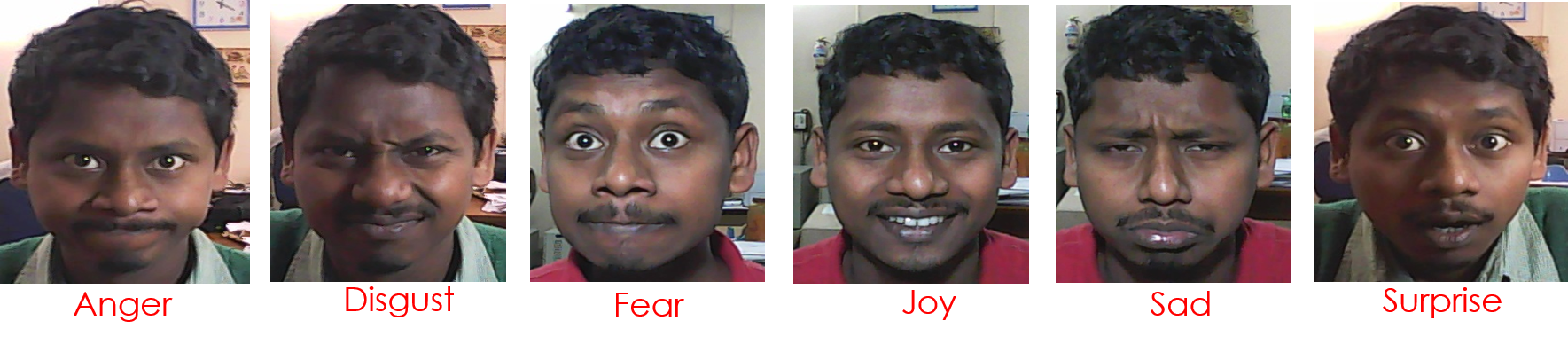

Anubhav Android Application

The Anubhav Android application can be downloaded by clicking here. To run Anubhav on Android OS, the OpenCV Manager Android app needs to be installed on the phone beforehand. To recognize facial expressions in real time, Anubhav captures faces only from the back camera of the phone. Moreover, the phone should be held in landscape orientation. The Anubhav app is able to identify only six basic expressions: anger, disgust, fear, joy, sadness, and surprise. Prototypes of these six basic expressions are shown in the following figure:

Anubhav for Windows OS

A demonstration of Anubhav on Windows OS can be seen on YouTube: click here.

Reference

[1] Agarwal, S., Santra, B. and Mukherjee, D.P., 2018. Anubhav: recognizing emotions through facial expression. The Visual Computer, 34(2), pp.177-191.